How does the native Go http client deal with failures?

An issue at work, where a client written in Go kept a TCP connection open to a backend that was struggling under high load, made me ask this question. It then turned into a rabbit hole of other questions.

At a high level, making an HTTP request involves three steps: a DNS lookup, TCP and TLS connection setup, and then the HTTP request itself.

Let’s first look at how Go resolves DNS records. I’ve been using Go 1.17 for all these examples.

DNS

First, the Go standard library has two resolvers: native (written in pure Go) and cgo.

Use export GODEBUG=netdns=2 when running the program to see what resolver is picked.

When running on a Mac, Go uses cgo and prints: go package net: using cgo DNS resolver. For details on how and why Go selects a resolver, check out the official docs.

You can force a specific resolver with GODEBUG=netdns=go,2.

These resolvers are responsible for turning a DNS name like ha.dmicheltest.com into an IP address that the client can then establish a TCP connection to.
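The resolver choice can also be scoped to a single resolver in code instead of the whole process. Here is a minimal sketch using the PreferGo field of net.Resolver. The helper name newGoResolver and the use of localhost as the lookup target are my own choices for illustration, not something from the setup above.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"time"
)

// newGoResolver (hypothetical helper) returns a resolver that prefers Go's
// built-in DNS client on platforms where it is available.
func newGoResolver() *net.Resolver {
	return &net.Resolver{PreferGo: true}
}

func main() {
	ctx, cancel := context.WithTimeout(context.Background(), 5*time.Second)
	defer cancel()

	// "localhost" keeps the sketch independent of any external DNS name.
	addrs, err := newGoResolver().LookupHost(ctx, "localhost")
	if err != nil {
		fmt.Println("lookup failed:", err)
		return
	}
	fmt.Println("resolved:", addrs)
}
```

Unlike GODEBUG, this only affects lookups made through that resolver value, which is handy when one part of a program needs different behavior from the rest.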

TCP/Transport

Once the client has an IP address, it attempts to establish a TCP connection to the server (3-way handshake). Once established, what happens if the server gets overloaded or has a hardware failure, making the IP address no longer available? Let’s take a look through some examples.

Locally, I have three web servers running on different IP addresses:

- 127.0.0.1

- 127.0.0.2

- 127.0.0.3

If you query the A record for ha.dmicheltest.com it will return 2 of the 3 records, shuffled. The shuffling is configured at the DNS provider. This gives the client basic load balancing when connecting to backends.

dig ha.dmicheltest.com +short

127.0.0.3

127.0.0.1

It’s rather typical for websites to return a few shuffled IP addresses for high availability. The client usually picks the first one and tries to connect to it. If that fails it tries the next one.
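To make the "try the first address, then the next" idea concrete, here is a simplified sketch. It is not Go's actual dialer code, and dialFirstAvailable and demo are hypothetical names. The demo uses two local listeners, one of which is closed before dialing, to stand in for a dead backend and a live one.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"net"
	"time"
)

// dialFirstAvailable tries each address in order and returns the first
// connection that succeeds. If every attempt fails it returns the first error.
func dialFirstAvailable(ctx context.Context, addrs []string) (net.Conn, error) {
	d := net.Dialer{Timeout: 2 * time.Second}
	var firstErr error
	for _, addr := range addrs {
		conn, err := d.DialContext(ctx, "tcp4", addr)
		if err == nil {
			return conn, nil
		}
		if firstErr == nil {
			firstErr = err
		}
	}
	if firstErr == nil {
		firstErr = errors.New("no addresses to dial")
	}
	return nil, firstErr
}

// demo returns the address we ended up connected to and the live address.
func demo() (string, string, error) {
	deadLn, err := net.Listen("tcp4", "127.0.0.1:0")
	if err != nil {
		return "", "", err
	}
	liveLn, err := net.Listen("tcp4", "127.0.0.1:0")
	if err != nil {
		deadLn.Close()
		return "", "", err
	}
	defer liveLn.Close()

	dead := deadLn.Addr().String()
	deadLn.Close() // nothing is listening on this port any more

	conn, err := dialFirstAvailable(context.Background(),
		[]string{dead, liveLn.Addr().String()})
	if err != nil {
		return "", "", err
	}
	defer conn.Close()
	return conn.RemoteAddr().String(), liveLn.Addr().String(), nil
}

func main() {
	connected, live, err := demo()
	if err != nil {
		fmt.Println("demo failed:", err)
		return
	}
	fmt.Printf("connected to %s (live server is %s)\n", connected, live)
}
```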

To see what Go is doing behind the scenes when making a request you can add some tracing.

clientTrace := &httptrace.ClientTrace{
	// GotConn fires once a connection (new or reused) has been obtained.
	GotConn: func(info httptrace.GotConnInfo) {
		fmt.Printf("conn was reused: %t %s\n", info.Reused, info.Conn.RemoteAddr())
	},
	DNSStart: func(info httptrace.DNSStartInfo) {
		fmt.Printf("dns start: %s \n", info.Host)
	},
	DNSDone: func(info httptrace.DNSDoneInfo) {
		fmt.Printf("dns done: %s %t \n", info.Addrs, info.Coalesced)
	},
	// ConnectStart fires for every dial attempt, including failed ones.
	ConnectStart: func(network, addr string) {
		fmt.Printf("conn start: %s %s\n", network, addr)
	},
}
ctx := httptrace.WithClientTrace(context.Background(), clientTrace)
// The URL needs a scheme; the test servers listen on port 1323.
req, err := http.NewRequestWithContext(ctx, "GET", "http://ha.dmicheltest.com:1323", nil)

With tracing enabled, it’s easy to see the DNS lookup and connection setup in the logs. If the connection is successful, Go reuses it for later requests, since keep-alive is enabled by default.

dns start: ha.dmicheltest.com

dns done: [{127.0.0.3 } {127.0.0.1 }] false

conn start: tcp 127.0.0.3:1323

conn was reused: false 127.0.0.3:1323

host: ha.dmicheltest.com status: 200 body: Hello, World from 127.0.0.3

conn was reused: true 127.0.0.3:1323

host: ha.dmicheltest.com status: 200 body: Hello, World from 127.0.0.3
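The reuse shown in these logs can be reproduced without any DNS setup. The sketch below uses a throwaway httptest server as a stand-in for my backends, and reuseFlags is a hypothetical helper that records the Reused flag for each request. One detail that is easy to miss: the response body has to be read to the end and closed, otherwise the connection cannot return to the idle pool.

```go
package main

import (
	"context"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/http/httptrace"
)

// reuseFlags sends n sequential GET requests to a local test server and
// records, per request, whether the connection was reused.
func reuseFlags(n int) ([]bool, error) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "Hello, World")
	}))
	defer srv.Close()

	client := srv.Client()
	var flags []bool
	for i := 0; i < n; i++ {
		trace := &httptrace.ClientTrace{
			GotConn: func(info httptrace.GotConnInfo) {
				flags = append(flags, info.Reused)
			},
		}
		ctx := httptrace.WithClientTrace(context.Background(), trace)
		req, err := http.NewRequestWithContext(ctx, http.MethodGet, srv.URL, nil)
		if err != nil {
			return nil, err
		}
		resp, err := client.Do(req)
		if err != nil {
			return nil, err
		}
		// Drain and close the body so the connection can go back to the idle pool.
		io.Copy(io.Discard, resp.Body)
		resp.Body.Close()
	}
	return flags, nil
}

func main() {
	flags, err := reuseFlags(3)
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Println("reused per request:", flags)
}
```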

As long as the TCP connection hasn’t been closed, Go will keep sending requests over it. Once that connection is closed (which can happen for many reasons), Go re-resolves the DNS record before opening a new one. If I stop the HTTP server on 127.0.0.3, Go re-resolves the DNS record and connects to another IP address.

dns start: ha.dmicheltest.com

dns done: [{127.0.0.3 } {127.0.0.1 }] false

conn start: tcp4 127.0.0.3:1323

conn start: tcp4 127.0.0.1:1323

conn was reused: false 127.0.0.1:1323

host: ha.dmicheltest.com status: 200 body: Hello, World from 127.0.0.1 fail: false

And if all the servers are down…

dns start: ha.dmicheltest.com

dns done: [{127.0.0.1 } {127.0.0.2 }] false

conn start: tcp4 127.0.0.1:1323

conn start: tcp4 127.0.0.2:1323

error sending request Get "http://ha.dmicheltest.com:1323": dial tcp4 127.0.0.1:1323: connect: connection refused
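When every address fails like this, the error returned by the client wraps a *net.OpError, so the caller can tell a failed dial apart from other problems. A minimal sketch, assuming a local port with nothing listening on it as the stand-in for a dead backend (failedOp and demo are hypothetical names):

```go
package main

import (
	"errors"
	"fmt"
	"net"
	"net/http"
	"time"
)

// failedOp reports which network operation failed ("dial", "read", ...)
// if err wraps a *net.OpError.
func failedOp(err error) (string, bool) {
	var opErr *net.OpError
	if errors.As(err, &opErr) {
		return opErr.Op, true
	}
	return "", false
}

// demo sends a request to a port that nothing is listening on.
func demo() (string, bool) {
	// Reserve a port, then close the listener so connections are refused.
	ln, err := net.Listen("tcp4", "127.0.0.1:0")
	if err != nil {
		return "", false
	}
	dead := ln.Addr().String()
	ln.Close()

	client := &http.Client{Timeout: 2 * time.Second}
	resp, err := client.Get("http://" + dead)
	if err == nil {
		resp.Body.Close()
		return "", false
	}
	return failedOp(err)
}

func main() {
	if op, ok := demo(); ok {
		fmt.Println("network operation that failed:", op)
	} else {
		fmt.Println("no network-level failure detected")
	}
}
```

A check like this is one way to decide whether a request is safe to retry, since a failed dial means nothing was sent to the server.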

This is now where the rabbit hole begins.

AAAA - IPv6

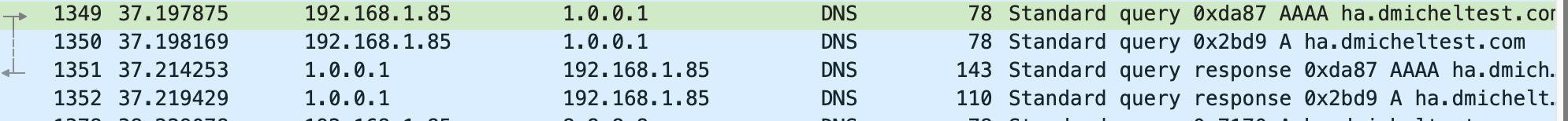

As I was investigating everything, I had Wireshark running in the background.

I noticed two queries being sent to the resolver for different DNS record types, A and AAAA. I found the AAAA query interesting since my environment doesn’t have IPv6 enabled.

It turns out Go will attempt to resolve both record types by default. To avoid this additional AAAA query, you can create a custom transport and specify tcp4 for the network.

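// disable AAAA/IPv6 DNS lookup by always dialing with "tcp4"
var zeroDialer net.Dialer // zero-value dialer with default settings
transport := defaultTransport.(*http.Transport).Clone()
transport.DialContext = func(ctx context.Context, network, addr string) (net.Conn, error) {
	// Ignore the requested network ("tcp") and force IPv4.
	return zeroDialer.DialContext(ctx, "tcp4", addr)
}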

You can also do the same for a custom DNS resolver, but specify ip4 for the lookup, since the DNS query itself can travel over UDP or TCP.

dnsResolver := &net.Resolver{
	// Dial is only used by Go's built-in (pure Go) resolver.
	Dial: func(ctx context.Context, network, address string) (net.Conn, error) {
		d := net.Dialer{
			Timeout: 10 * time.Second,
		}
		fmt.Printf("network: %s address: %s\n", network, address)
		return d.DialContext(ctx, network, address)
	},
}
// "ip4" restricts the lookup to A records.
addr, err := dnsResolver.LookupIP(context.Background(), "ip4", "ha.dmicheltest.com")

Okay, that solves the extra AAAA lookup. What would happen if ha.dmicheltest.com also returned an AAAA record?

Let’s add one and use the same client setup as above.

dns start: ha.dmicheltest.com

dns done: [{::1 } {127.0.0.1 } {127.0.0.2 }] false

conn start: tcp [::1]:1323

conn was reused: false [::1]:1323

host: ha.dmicheltest.com status: 200 body: Hello, World from [::1].

Well, that’s interesting: the IPv6 address is selected. Why is that? According to RFC 6724, in dual-stack implementations the default address selection algorithm can prefer IPv6 over IPv4. This lets clients make the transition to IPv6 gracefully when the server supports it.

What would happen if something was wrong with your IPv6 connection? Would you as a user be happy with the page not loading? This is where RFC 6555, aptly named “Happy Eyeballs,” comes into play.

When a server’s IPv4 path and protocol are working, but the server’s IPv6 path and protocol are not working, a dual-stack client application experiences significant connection delay compared to an IPv4-only client. This is undesirable because it causes the dual-stack client to have a worse user experience.

The Go dialer starts with the preferred address family (IPv6 here) and gives it a head start of FallbackDelay, which defaults to 300ms. If the IPv6 connection has not been established by then, Go starts an IPv4 attempt in parallel and uses whichever connection is established first.

FallbackDelay specifies the length of time to wait before spawning a RFC 6555 Fast Fallback connection. That is, this is the amount of time to wait for IPv6 to succeed before assuming that IPv6 is misconfigured and falling back to IPv4.
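The fallback idea is easier to see in a small simulation. This is a sketch of the general technique, not Go's dialer source: raceWithFallback, fakeDial and demoWinner are hypothetical names, the "connections" are just strings, and the 20ms delay and 300ms latency are arbitrary values picked to keep the demo fast.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

type dialFunc func(ctx context.Context) (string, error)

type result struct {
	name string
	err  error
}

// raceWithFallback starts primary immediately and starts fallback once delay
// has passed (or as soon as primary fails). The first success wins.
func raceWithFallback(ctx context.Context, delay time.Duration, primary, fallback dialFunc) (string, error) {
	ctx, cancel := context.WithCancel(ctx)
	defer cancel() // stops the losing attempt

	results := make(chan result, 2) // buffered so no goroutine blocks forever
	start := func(dial dialFunc) {
		go func() {
			name, err := dial(ctx)
			results <- result{name, err}
		}()
	}

	start(primary)
	timer := time.NewTimer(delay)
	defer timer.Stop()

	pending, fallbackStarted := 1, false
	var firstErr error
	for pending > 0 {
		select {
		case <-timer.C:
			if !fallbackStarted {
				start(fallback)
				fallbackStarted = true
				pending++
			}
		case r := <-results:
			pending--
			if r.err == nil {
				return r.name, nil
			}
			if firstErr == nil {
				firstErr = r.err
			}
			if !fallbackStarted { // primary failed early: do not wait for the timer
				start(fallback)
				fallbackStarted = true
				pending++
			}
		}
	}
	return "", firstErr
}

// fakeDial simulates a connection attempt that takes latency to succeed.
func fakeDial(name string, latency time.Duration) dialFunc {
	return func(ctx context.Context) (string, error) {
		select {
		case <-time.After(latency):
			return name, nil
		case <-ctx.Done():
			return "", ctx.Err()
		}
	}
}

// demoWinner reports which simulated family wins the race.
func demoWinner(ipv6Healthy bool) (string, error) {
	v6Latency := 300 * time.Millisecond // simulates a slow or broken IPv6 path
	if ipv6Healthy {
		v6Latency = 0
	}
	return raceWithFallback(context.Background(), 20*time.Millisecond,
		fakeDial("ipv6", v6Latency), fakeDial("ipv4", 0))
}

func main() {
	slow, _ := demoWinner(false)
	healthy, _ := demoWinner(true)
	fmt.Println("slow IPv6 path, winner:", slow)
	fmt.Println("healthy IPv6 path, winner:", healthy)
}
```

A real dialer would also have to close the losing connection if both attempts succeed, which this simulation skips.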

// An example of configuring a transport with a FallbackDelay.
var defaultTransport http.RoundTripper = &http.Transport{
	DialContext: (&net.Dialer{
		Timeout:   30 * time.Second,
		KeepAlive: 30 * time.Second,
		// FallbackDelay is a time.Duration; a bare 300 would mean 300ns.
		FallbackDelay: 300 * time.Millisecond,
	}).DialContext,
	ForceAttemptHTTP2: true,
	MaxIdleConns:      100,
	IdleConnTimeout:   90 * time.Second,
	DisableKeepAlives: false,
}

Wrapping Up

Why did I mention the different types of resolvers (native Go and cgo) and AAAA records as part of this post? When I was creating test clients and DNS records for ha.dmicheltest.com, I inadvertently left off the Shuffle filter chain and was puzzled why the client was always connecting to the same set of IP addresses.

Was the Go resolver sorting the results? I was convinced it was, so off I went to read the source code and do some Googling.

This is when I stumbled across two really interesting posts by the engineering team over at Grab: “Troubleshooting Unusual AWS ELB 5XX Error” and “DNS Resolution in Go and Cgo.”

The posts explain in great detail the odd behavior they were seeing: traffic was not being evenly distributed across their frontend load balancers. It turns out that when the Go team wrote the native Go DNS resolver, some part of RFC 6724 and of how getaddrinfo is implemented in glibc was missed, which caused IPv4 records to be sorted!

Check out the full source for address selection; it’s not for the faint of heart. This is the commit that fixes the issue for Grab in the native Go resolver and restores the round-robin ordering that so much of the web depends on.

It turns out that in my particular case the Go resolver was not responsible for sorting the results; it was a user misconfiguration in NS1. But it led me down an interesting path of learning more about RFCs I only knew in passing.

Reading the source code to learn about how Go makes an HTTP request reminded me again how many details there are in these fundamental building blocks of the Internet.

This post only scratches the surface of how Go, and really any programming language, attempts to do everything possible to give your clients/users a reliable experience.

There are many more topics to cover, such as TCP retransmissions and HTTP retries, that will have to wait for another day.

And it turns out my issue at work was related to Envoy hot-reloading, not the Go HTTP client.